For those who must conduct such experiments, however, there are guidelines for balancing the tradeoff between efficiency and reliability (that is, the probability that an independent study will replicate your results). In a study conducted by Thirion et al (2007), a large sample of 81 subjects was partitioned into different numbers of subgroups: 2 groups of 40 each, 3 groups of 27 each, 4 groups of 20 each, and so on, to test whether there is a noticeable cutoff for reproducible effects below a specific group size. Further parameters were tested, such as group-level variability and the sensitivity of different p-thresholds.
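To make the splitting scheme concrete, here is a minimal sketch of how 81 subjects could be divided into equal-sized subgroups. The function name `partition_subjects`, the fixed seed, and the choice to drop leftover subjects are my own assumptions for illustration; this is not the authors' code, and the paper should be consulted for how the groups were actually drawn.

```python
import random

def partition_subjects(n_subjects, n_groups, seed=0):
    """Randomly split subject indices into n_groups equal-sized groups.

    Hypothetical helper: subjects that do not fit evenly are dropped,
    which is an assumption made here to keep the groups the same size.
    """
    rng = random.Random(seed)
    subjects = list(range(n_subjects))
    rng.shuffle(subjects)
    size = n_subjects // n_groups  # integer group size, e.g. 81 // 2 = 40
    return [subjects[i * size:(i + 1) * size] for i in range(n_groups)]

for k in (2, 3, 4):
    groups = partition_subjects(81, k)
    print(k, "groups of", len(groups[0]))
# prints:
# 2 groups of 40
# 3 groups of 27
# 4 groups of 20
```

Each split can then be analyzed separately, and the agreement between the resulting group maps gives a rough sense of how reproducible an effect is at that group size.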

The authors found that an optimal number of subjects to balance both reliability and statistical sensitivity (that is, the ability to detect an effect that is actually present) is about N=25-27, with diminishing returns after that. In addition, the authors counsel the use of mixed-effects models, which take into account variance from first-level analyses (i.e., individual subjects), and downweight subjects with high variability. This procedure is similar to the one employed in FSL's FLAME and AFNI's 3dMEMA.
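As a rough illustration of what "downweighting subjects with high variability" means, here is a sketch of a simple inverse-variance weighted group mean. This is a deliberate simplification and an assumption on my part: it is not the algorithm used by FLAME or 3dMEMA, which estimate the between-subject variance from the data rather than taking it as a fixed input. The function name `weighted_group_mean` and the `between_var` parameter are hypothetical.

```python
def weighted_group_mean(estimates, variances, between_var=0.0):
    """Inverse-variance weighted mean of per-subject effect estimates.

    estimates   : first-level effect estimate for each subject
    variances   : first-level (within-subject) variance for each subject
    between_var : assumed between-subject variance (fixed here for
                  simplicity; real mixed-effects tools estimate it)
    """
    # Noisier subjects get smaller weights.
    weights = [1.0 / (v + between_var) for v in variances]
    total = sum(weights)
    mean = sum(w * e for w, e in zip(weights, estimates)) / total
    return mean, weights

# Toy numbers: the third subject has a large estimate but is very noisy.
estimates = [1.0, 1.0, 4.0]
variances = [1.0, 1.0, 4.0]

mean, weights = weighted_group_mean(estimates, variances)
print("weights:", weights)
print("weighted mean:", mean)
print("unweighted mean:", sum(estimates) / len(estimates))
```

In the toy example the noisy third subject pulls the plain average up to 2.0, while the weighted mean stays closer to the two more reliable subjects.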

|

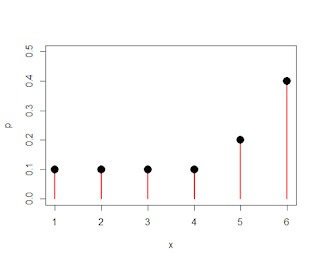

| Figure 8 reproduced from Thirion et al (2007). Both Kappa (a measure of reliability) and activated voxels increase significantly up to around 27 subjects, with a plateau shortly after that. |

As a side note, besides merely testing for statistical significance (which is virtually guaranteed with a large enough sample), effect sizes should also be calculated to measure the...well, size of your effect. An effect size simply quantifies the magnitude of the difference between your calculated mean and the null hypothesis mean, in units of standard deviations. The following table will help you qualify how big your effect is when describing the result:

0-0.3: Wee

0.3-0.5: Not so wee

0.5+: Friggin' HUGE
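For the curious, the effect size described above (difference from the null mean, in standard deviation units) is commonly called Cohen's d for the one-sample case. Here is a minimal sketch using only the standard library; the function names, the toy data, and the handling of the exact boundary values 0.3 and 0.5 are my own assumptions.

```python
import statistics

def cohens_d_one_sample(values, null_mean=0.0):
    """(sample mean - null mean) / sample standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD, n - 1 in the denominator
    return (mean - null_mean) / sd

def describe_effect(d):
    """Label an effect size using the highly scientific table above.

    Assumption: boundary values fall into the larger category.
    """
    size = abs(d)
    if size < 0.3:
        return "Wee"
    if size < 0.5:
        return "Not so wee"
    return "Friggin' HUGE"

# Made-up parameter estimates from five hypothetical subjects.
betas = [1.0, 2.0, 3.0, 4.0, 5.0]
d = cohens_d_one_sample(betas, null_mean=0.0)
print(round(d, 2), describe_effect(d))
```

Note that, unlike a t-statistic, this number does not grow just because you scanned more subjects, which is the whole point of reporting it.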

More details, along with a description of why cluster-thresholding is a better method than whole-brain corrected voxel-level thresholding, can be found in the original paper.